Project flow#

LaminDB allows tracking data flow on the entire project level.

Here, we walk through example app uploads, pipelines & notebooks, following Schmidt et al., 2022.

A CRISPR screen reading out a phenotypic endpoint on T cells is paired with scRNA-seq to generate insights into IFN-γ production.

These insights get linked back to the original data through the steps taken in the project to provide context for interpretation & future decision making.

More specifically: Why should I care about data flow?

Data flow tracks data sources & transformations to trace biological insights, verify experimental outcomes, meet regulatory standards, increase the robustness of research and optimize the feedback loop of team-wide learning iterations.

While tracking data flow is easier when it’s governed by deterministic pipelines, it becomes hard when it’s governed by interactive human-driven analyses.

LaminDB interfaces with workflow managers for the former and embraces the latter.

Setup#

Init a test instance:

!lamin init --storage ./mydata

💡 creating schemas: core==0.46.3

✅ saved: User(id='DzTjkKse', handle='testuser1', email='testuser1@lamin.ai', name='Test User1', updated_at=2023-08-31 00:33:55)

✅ saved: Storage(id='drYMVIZX', root='/home/runner/work/lamin-usecases/lamin-usecases/docs/mydata', type='local', updated_at=2023-08-31 00:33:55, created_by_id='DzTjkKse')

✅ loaded instance: testuser1/mydata

💡 did not register local instance on hub (if you want, call `lamin register`)

Import lamindb:

import lamindb as ln

from IPython.display import Image, display

✅ loaded instance: testuser1/mydata (lamindb 0.51.2)

Steps#

In the following, we walk through example steps covering different types of transforms (Transform).

Note

The full notebooks are in this repository.

App upload of phenotypic data  #

Register data through app upload from wetlab by testuser1:

ln.setup.login("testuser1")

transform = ln.Transform(name="Upload GWS CRISPRa result", type="app")

ln.track(transform)

output_path = ln.dev.datasets.schmidt22_crispra_gws_IFNG(ln.settings.storage)

output_file = ln.File(output_path, description="Raw data of schmidt22 crispra GWS")

output_file.save()

✅ logged in with email testuser1@lamin.ai and id DzTjkKse

✅ saved: Transform(id='FyNGHYEYmulKHp', name='Upload GWS CRISPRa result', type='app', updated_at=2023-08-31 00:33:57, created_by_id='DzTjkKse')

✅ saved: Run(id='LhTHTlALeDgHOAiN0cCc', run_at=2023-08-31 00:33:57, transform_id='FyNGHYEYmulKHp', created_by_id='DzTjkKse')

💡 file in storage 'mydata' with key 'schmidt22-crispra-gws-IFNG.csv'

Hit identification in notebook  #

Access, transform & register data in drylab by testuser2:

ln.setup.login("testuser2")

transform = ln.Transform(name="GWS CRIPSRa analysis", type="notebook")

ln.track(transform)

# access

input_file = ln.File.filter(key="schmidt22-crispra-gws-IFNG.csv").one()

# identify hits

input_df = input_file.load().set_index("id")

output_df = input_df[input_df["pos|fdr"] < 0.01].copy()

# register hits in output file

ln.File(output_df, description="hits from schmidt22 crispra GWS").save()

✅ logged in with email testuser2@lamin.ai and id bKeW4T6E

❗ record with similar name exist! did you mean to load it?

| name | id | __ratio__ |
|---|---|---|
| Test User1 | DzTjkKse | 90.0 |

✅ saved: User(id='bKeW4T6E', handle='testuser2', email='testuser2@lamin.ai', name='Test User2', updated_at=2023-08-31 00:33:59)

✅ saved: Transform(id='QfaaiH3PgXD5lo', name='GWS CRIPSRa analysis', type='notebook', updated_at=2023-08-31 00:33:59, created_by_id='bKeW4T6E')

✅ saved: Run(id='8Lxcoit3CY4XURcUGREQ', run_at=2023-08-31 00:33:59, transform_id='QfaaiH3PgXD5lo', created_by_id='bKeW4T6E')

💡 adding file Ge8qB5KBQNCeW0RNk5H0 as input for run 8Lxcoit3CY4XURcUGREQ, adding parent transform FyNGHYEYmulKHp

💡 file will be copied to default storage upon `save()` with key `None` ('.lamindb/zG2EmxGOmuCj0aZnFqBa.parquet')

💡 data is a dataframe, consider using .from_df() to link column names as features

✅ storing file 'zG2EmxGOmuCj0aZnFqBa' at '.lamindb/zG2EmxGOmuCj0aZnFqBa.parquet'

Inspect data flow:

file = ln.File.filter(description="hits from schmidt22 crispra GWS").one()

file.view_flow()

Sequencer upload  #

Upload files from sequencer:

ln.setup.login("testuser1")

ln.track(ln.Transform(name="Chromium 10x upload", type="pipeline"))

# register output files of upload

upload_dir = ln.dev.datasets.dir_scrnaseq_cellranger(
    "perturbseq", basedir=ln.settings.storage, output_only=False
)

ln.File(upload_dir.parent / "fastq/perturbseq_R1_001.fastq.gz").save()

ln.File(upload_dir.parent / "fastq/perturbseq_R2_001.fastq.gz").save()

ln.setup.login("testuser2")

✅ logged in with email testuser1@lamin.ai and id DzTjkKse

✅ saved: Transform(id='asCuZcubwaipQv', name='Chromium 10x upload', type='pipeline', updated_at=2023-08-31 00:34:00, created_by_id='DzTjkKse')

✅ saved: Run(id='wL9sxwcZ1MV3iVbhr7yt', run_at=2023-08-31 00:34:00, transform_id='asCuZcubwaipQv', created_by_id='DzTjkKse')

💡 file in storage 'mydata' with key 'fastq/perturbseq_R1_001.fastq.gz'

💡 file in storage 'mydata' with key 'fastq/perturbseq_R2_001.fastq.gz'

✅ logged in with email testuser2@lamin.ai and id bKeW4T6E

scRNA-seq bioinformatics pipeline  #

Process the uploaded files using a script or workflow manager (see: Pipelines) and obtain 3 output files in a directory filtered_feature_bc_matrix/:

transform = ln.Transform(name="Cell Ranger", version="7.2.0", type="pipeline")

ln.track(transform)

# access uploaded files as inputs for the pipeline

input_files = ln.File.filter(key__startswith="fastq/perturbseq").all()

input_paths = [file.stage() for file in input_files]

# register output files

output_files = ln.File.from_dir("./mydata/perturbseq/filtered_feature_bc_matrix/")

ln.save(output_files)

✅ saved: Transform(id='wlqQRtbMVc8hwl', name='Cell Ranger', version='7.2.0', type='pipeline', updated_at=2023-08-31 00:34:01, created_by_id='bKeW4T6E')

✅ saved: Run(id='D8Ow7O5itlCJDo01GFGL', run_at=2023-08-31 00:34:01, transform_id='wlqQRtbMVc8hwl', created_by_id='bKeW4T6E')

💡 adding file 2IVTqadSj7LlJ3RLzDK2 as input for run D8Ow7O5itlCJDo01GFGL, adding parent transform asCuZcubwaipQv

💡 adding file WXy5qOTYuITD6M2FF3ZV as input for run D8Ow7O5itlCJDo01GFGL, adding parent transform asCuZcubwaipQv

✅ created 3 files from directory using storage /home/runner/work/lamin-usecases/lamin-usecases/docs/mydata and key = perturbseq/filtered_feature_bc_matrix/

Post-process these 3 files:

transform = ln.Transform(name="Postprocess Cell Ranger", version="2.0", type="pipeline")

ln.track(transform)

input_files = [f.stage() for f in output_files]

output_path = ln.dev.datasets.schmidt22_perturbseq(basedir=ln.settings.storage)

output_file = ln.File(output_path, description="perturbseq counts")

output_file.save()

✅ saved: Transform(id='1I1vsRml40e61j', name='Postprocess Cell Ranger', version='2.0', type='pipeline', updated_at=2023-08-31 00:34:01, created_by_id='bKeW4T6E')

✅ saved: Run(id='tffplIqlaB6zIxUFqW6r', run_at=2023-08-31 00:34:01, transform_id='1I1vsRml40e61j', created_by_id='bKeW4T6E')

💡 adding file tc8B6nRZg6Df0MLjcSK0 as input for run tffplIqlaB6zIxUFqW6r, adding parent transform wlqQRtbMVc8hwl

💡 adding file jHWdtR54tOOkYxeZCY0X as input for run tffplIqlaB6zIxUFqW6r, adding parent transform wlqQRtbMVc8hwl

💡 adding file frtCfY668LVsP0oPVtDR as input for run tffplIqlaB6zIxUFqW6r, adding parent transform wlqQRtbMVc8hwl

💡 file in storage 'mydata' with key 'schmidt22_perturbseq.h5ad'

💡 data is AnnDataLike, consider using .from_anndata() to link var_names and obs.columns as features

Inspect data flow:

output_files[0].view_flow()

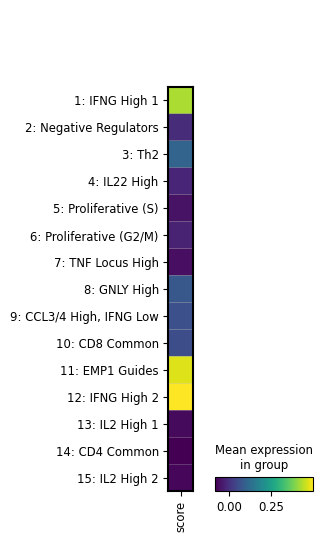

Integrate scRNA-seq & phenotypic data  #

Integrate data in a notebook:

transform = ln.Transform(
    name="Perform single cell analysis, integrate with CRISPRa screen",
    type="notebook",
)

ln.track(transform)

file_ps = ln.File.filter(description__icontains="perturbseq").one()

adata = file_ps.load()

file_hits = ln.File.filter(description="hits from schmidt22 crispra GWS").one()

screen_hits = file_hits.load()

import scanpy as sc

sc.tl.score_genes(adata, adata.var_names.intersection(screen_hits.index).tolist())

filesuffix = "_fig1_score-wgs-hits.png"

sc.pl.umap(adata, color="score", show=False, save=filesuffix)

filepath = f"figures/umap{filesuffix}"

file = ln.File(filepath, key=filepath)

file.save()

filesuffix = "fig2_score-wgs-hits-per-cluster.png"

sc.pl.matrixplot(
    adata, groupby="cluster_name", var_names=["score"], show=False, save=filesuffix
)

filepath = f"figures/matrixplot_{filesuffix}"

file = ln.File(filepath, key=filepath)

file.save()

✅ saved: Transform(id='bpARMLxORj3ESM', name='Perform single cell analysis, integrate with CRISPRa screen', type='notebook', updated_at=2023-08-31 00:34:02, created_by_id='bKeW4T6E')

✅ saved: Run(id='F1CQ72KwL6O3rJMnXCGg', run_at=2023-08-31 00:34:02, transform_id='bpARMLxORj3ESM', created_by_id='bKeW4T6E')

💡 adding file nbusHDpk8XRUhwwcbprI as input for run F1CQ72KwL6O3rJMnXCGg, adding parent transform 1I1vsRml40e61j

💡 adding file zG2EmxGOmuCj0aZnFqBa as input for run F1CQ72KwL6O3rJMnXCGg, adding parent transform QfaaiH3PgXD5lo

/opt/hostedtoolcache/Python/3.9.17/x64/lib/python3.9/site-packages/anndata/_core/anndata.py:1113: FutureWarning: is_categorical_dtype is deprecated and will be removed in a future version. Use isinstance(dtype, CategoricalDtype) instead

if not is_categorical_dtype(df_full[k]):

/opt/hostedtoolcache/Python/3.9.17/x64/lib/python3.9/site-packages/anndata/_core/anndata.py:1113: FutureWarning: is_categorical_dtype is deprecated and will be removed in a future version. Use isinstance(dtype, CategoricalDtype) instead

if not is_categorical_dtype(df_full[k]):

/opt/hostedtoolcache/Python/3.9.17/x64/lib/python3.9/site-packages/scanpy/plotting/_tools/scatterplots.py:1207: FutureWarning: is_categorical_dtype is deprecated and will be removed in a future version. Use isinstance(dtype, CategoricalDtype) instead

if not is_categorical_dtype(values):

WARNING: saving figure to file figures/umap_fig1_score-wgs-hits.png

💡 file will be copied to default storage upon `save()` with key 'figures/umap_fig1_score-wgs-hits.png'

✅ storing file 'VfsYXOqdm6g6w1UF0kjo' at 'figures/umap_fig1_score-wgs-hits.png'

/opt/hostedtoolcache/Python/3.9.17/x64/lib/python3.9/site-packages/scanpy/plotting/_matrixplot.py:143: FutureWarning: The default of observed=False is deprecated and will be changed to True in a future version of pandas. Pass observed=False to retain current behavior or observed=True to adopt the future default and silence this warning.

values_df = self.obs_tidy.groupby(level=0).mean()

WARNING: saving figure to file figures/matrixplot_fig2_score-wgs-hits-per-cluster.png

💡 file will be copied to default storage upon `save()` with key 'figures/matrixplot_fig2_score-wgs-hits-per-cluster.png'

✅ storing file 'JFpwiVrfA4QfMAtTKXig' at 'figures/matrixplot_fig2_score-wgs-hits-per-cluster.png'

Review results#

Let’s load one of the plots:

ln.track()

file = ln.File.filter(key__contains="figures/matrixplot").one()

file.stage()

💡 notebook imports: ipython==8.14.0 lamindb==0.51.2 scanpy==1.9.4

✅ saved: Transform(id='1LCd8kco9lZUz8', name='Project flow', short_name='project-flow', version='0', type=notebook, updated_at=2023-08-31 00:34:04, created_by_id='bKeW4T6E')

✅ saved: Run(id='DQmpwQr2V2aAGnQYAFzb', run_at=2023-08-31 00:34:04, transform_id='1LCd8kco9lZUz8', created_by_id='bKeW4T6E')

💡 adding file JFpwiVrfA4QfMAtTKXig as input for run DQmpwQr2V2aAGnQYAFzb, adding parent transform bpARMLxORj3ESM

PosixUPath('/home/runner/work/lamin-usecases/lamin-usecases/docs/mydata/figures/matrixplot_fig2_score-wgs-hits-per-cluster.png')

display(Image(filename=file.path))

We see that the image file is tracked as an input of the current notebook. The input is highlighted, the notebook follows at the bottom:

file.view_flow()

Alternatively, we can also look at the sequence of transforms:

transform = ln.Transform.search("Bird's eye view", return_queryset=True).first()

transform.parents.df()

| id | name | short_name | version | initial_version_id | type | reference | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|
| wlqQRtbMVc8hwl | Cell Ranger | None | 7.2.0 | None | pipeline | None | 2023-08-31 00:34:01 | bKeW4T6E |

transform.view_parents()
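To make the parent relationship concrete, here is a minimal plain-Python sketch that does not use LaminDB. The `PARENTS` mapping is hand-copied from the "adding parent transform" log messages in the steps above, and `ancestors` is a hypothetical helper rather than part of the LaminDB API. It walks the parent links breadth-first to collect every upstream transform.

```python
# Parent links as reported by the "adding parent transform" log lines above.
# This dict and the helper below are illustrative only, not LaminDB objects.
PARENTS = {
    "Upload GWS CRISPRa result": [],
    "GWS CRIPSRa analysis": ["Upload GWS CRISPRa result"],
    "Chromium 10x upload": [],
    "Cell Ranger": ["Chromium 10x upload"],
    "Postprocess Cell Ranger": ["Cell Ranger"],
    "Perform single cell analysis, integrate with CRISPRa screen": [
        "Postprocess Cell Ranger",
        "GWS CRIPSRa analysis",
    ],
    "Project flow": ["Perform single cell analysis, integrate with CRISPRa screen"],
}


def ancestors(name, parents=PARENTS):
    """Return all upstream transforms of `name`, nearest first, without duplicates."""
    seen = []
    queue = list(parents[name])
    while queue:
        current = queue.pop(0)
        if current in seen:
            continue
        seen.append(current)
        queue.extend(parents[current])
    return seen


print(ancestors("Postprocess Cell Ranger"))
print(len(ancestors("Project flow")))
```

In this toy graph, the current notebook ("Project flow") has every other transform of the project as an ancestor, which is what the flow diagrams visualize.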

Understand runs#

We tracked pipeline and notebook runs through run_context, which stores a Transform and a Run record as a global context.

File objects are the inputs and outputs of runs.

What if I don’t want a global context?

Sometimes, we don’t want to create a global run context but manually pass a run when creating a file:

run = ln.Run(transform=transform)

ln.File(filepath, run=run)
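The difference between the two styles can be sketched with a toy model. Everything below (`Run`, `File`, `RunContext`, `track`, `make_file`) is a hypothetical stand-in written for illustration and is not how LaminDB implements it; it only shows the pattern of falling back to a global context when no run is passed explicitly.

```python
from dataclasses import dataclass
from typing import Optional


# Toy stand-ins for illustration only; these are NOT the LaminDB classes.
@dataclass
class Run:
    transform_name: str


@dataclass
class File:
    path: str
    run: Optional[Run] = None


class RunContext:
    """Module-level holder for the currently tracked run (hypothetical)."""

    run: Optional[Run] = None


def track(transform_name: str) -> Run:
    """Set the global context, loosely mimicking the role of ln.track()."""
    RunContext.run = Run(transform_name)
    return RunContext.run


def make_file(path: str, run: Optional[Run] = None) -> File:
    """Attach the explicit run if given, else fall back to the global context."""
    return File(path, run if run is not None else RunContext.run)


track("Cell Ranger")
implicit = make_file("matrix.mtx.gz")  # picks up the global run
explicit = make_file("figure.png", run=Run("Manual analysis"))  # bypasses it
print(implicit.run.transform_name)
print(explicit.run.transform_name)
```

Passing the run explicitly keeps the provenance of a single file independent of whatever happens to be tracked globally at that moment.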

When does a file appear as a run input?

When accessing a file via stage(), load() or backed(), two things happen:

1. The current run gets added to file.input_of
2. The transform of that file gets added as a parent of the current transform

You can switch off auto-tracking of run inputs by setting ln.settings.track_run_inputs = False (see: Can I disable tracking run inputs?).

You can also track run inputs on a case-by-case basis via is_run_input=True, e.g.:

file.load(is_run_input=True)
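The bookkeeping described in this section can be sketched in plain Python. The classes and the `access` function below are toy stand-ins, not LaminDB code, and the rule that an explicit `is_run_input` overrides the global setting is an assumption made for this sketch.

```python
from dataclasses import dataclass, field
from typing import List, Optional


# Toy stand-ins for illustration only; these are NOT the LaminDB classes.
@dataclass(eq=False)
class Transform:
    name: str
    parents: List["Transform"] = field(default_factory=list)


@dataclass(eq=False)
class Run:
    transform: Transform


@dataclass(eq=False)
class File:
    key: str
    transform: Transform  # the transform that created this file
    input_of: List[Run] = field(default_factory=list)


def access(file: File, current_run: Run, is_run_input: Optional[bool] = None,
           track_run_inputs: bool = True) -> None:
    """Mimic the bookkeeping described for stage(), load() or backed()."""
    # Assumption: an explicit is_run_input overrides the global setting.
    should_track = track_run_inputs if is_run_input is None else is_run_input
    if not should_track:
        return
    # 1. The current run gets added to file.input_of.
    if current_run not in file.input_of:
        file.input_of.append(current_run)
    # 2. The file's transform becomes a parent of the current transform.
    if file.transform not in current_run.transform.parents:
        current_run.transform.parents.append(file.transform)


upload = Transform("Chromium 10x upload")
cell_ranger = Transform("Cell Ranger")
fastq = File("fastq/perturbseq_R1_001.fastq.gz", transform=upload)
run = Run(cell_ranger)

access(fastq, run, track_run_inputs=False)  # auto-tracking off: nothing recorded
untracked_inputs = len(fastq.input_of)
access(fastq, run, track_run_inputs=False, is_run_input=True)  # opt in for this call
print(untracked_inputs, [t.name for t in cell_ranger.parents])
```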

Query by provenance#

We can query or search for the notebook that created the file:

transform = ln.Transform.search("GWS CRIPSRa analysis", return_queryset=True).first()

And then find all the files created by that notebook:

ln.File.filter(transform=transform).df()

| id | storage_id | key | suffix | accessor | description | version | initial_version_id | size | hash | hash_type | transform_id | run_id | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| zG2EmxGOmuCj0aZnFqBa | drYMVIZX | None | .parquet | DataFrame | hits from schmidt22 crispra GWS | None | None | 18368 | yFC1asXuK86w1NOBD_4dgw | md5 | QfaaiH3PgXD5lo | 8Lxcoit3CY4XURcUGREQ | 2023-08-31 00:33:59 | bKeW4T6E |

Which transform ingested a given file?

file = ln.File.filter().first()

file.transform

Transform(id='FyNGHYEYmulKHp', name='Upload GWS CRISPRa result', type='app', updated_at=2023-08-31 00:33:58, created_by_id='DzTjkKse')

And which user?

file.created_by

User(id='DzTjkKse', handle='testuser1', email='testuser1@lamin.ai', name='Test User1', updated_at=2023-08-31 00:34:00)
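These lookups work because every file record carries the ids of the transform and the user that created it. The following sketch mimics that with plain dictionaries; the rows are hand-copied from the tables in this guide, and `provenance` and `files_created_by` are hypothetical helpers, not LaminDB functions.

```python
# Rows hand-copied from the File, Transform and User tables in this guide.
# The dicts and helpers are illustrative only, not LaminDB objects.
FILES = {
    "zG2EmxGOmuCj0aZnFqBa": {"transform_id": "QfaaiH3PgXD5lo", "created_by_id": "bKeW4T6E"},
    "nbusHDpk8XRUhwwcbprI": {"transform_id": "1I1vsRml40e61j", "created_by_id": "bKeW4T6E"},
    "WXy5qOTYuITD6M2FF3ZV": {"transform_id": "asCuZcubwaipQv", "created_by_id": "DzTjkKse"},
}
TRANSFORMS = {
    "QfaaiH3PgXD5lo": "GWS CRIPSRa analysis",
    "1I1vsRml40e61j": "Postprocess Cell Ranger",
    "asCuZcubwaipQv": "Chromium 10x upload",
}
USERS = {"bKeW4T6E": "testuser2", "DzTjkKse": "testuser1"}


def provenance(file_id):
    """Return (transform name, user handle) for a file by following its foreign keys."""
    row = FILES[file_id]
    return TRANSFORMS[row["transform_id"]], USERS[row["created_by_id"]]


def files_created_by(transform_id):
    """Return the ids of all files whose transform_id matches."""
    return [fid for fid, row in FILES.items() if row["transform_id"] == transform_id]


print(provenance("WXy5qOTYuITD6M2FF3ZV"))
print(files_created_by("QfaaiH3PgXD5lo"))
```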

Which transforms were created by a given user?

users = ln.User.lookup()

ln.Transform.filter(created_by=users.testuser2).df()

| id | name | short_name | version | initial_version_id | type | reference | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|
| QfaaiH3PgXD5lo | GWS CRIPSRa analysis | None | None | None | notebook | None | 2023-08-31 00:33:59 | bKeW4T6E |
| wlqQRtbMVc8hwl | Cell Ranger | None | 7.2.0 | None | pipeline | None | 2023-08-31 00:34:01 | bKeW4T6E |
| 1I1vsRml40e61j | Postprocess Cell Ranger | None | 2.0 | None | pipeline | None | 2023-08-31 00:34:02 | bKeW4T6E |
| bpARMLxORj3ESM | Perform single cell analysis, integrate with C... | None | None | None | notebook | None | 2023-08-31 00:34:04 | bKeW4T6E |
| 1LCd8kco9lZUz8 | Project flow | project-flow | 0 | None | notebook | None | 2023-08-31 00:34:04 | bKeW4T6E |

Which notebooks were created by a given user?

ln.Transform.filter(created_by=users.testuser2, type="notebook").df()

| id | name | short_name | version | initial_version_id | type | reference | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|
| QfaaiH3PgXD5lo | GWS CRIPSRa analysis | None | None | None | notebook | None | 2023-08-31 00:33:59 | bKeW4T6E |
| bpARMLxORj3ESM | Perform single cell analysis, integrate with C... | None | None | None | notebook | None | 2023-08-31 00:34:04 | bKeW4T6E |
| 1LCd8kco9lZUz8 | Project flow | project-flow | 0 | None | notebook | None | 2023-08-31 00:34:04 | bKeW4T6E |

We can also view all recent additions to the entire database:

ln.view()

File

| id | storage_id | key | suffix | accessor | description | version | initial_version_id | size | hash | hash_type | transform_id | run_id | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| JFpwiVrfA4QfMAtTKXig | drYMVIZX | figures/matrixplot_fig2_score-wgs-hits-per-clu... | .png | None | None | None | None | 28814 | JYIPcat0YWYVCX3RVd3mww | md5 | bpARMLxORj3ESM | F1CQ72KwL6O3rJMnXCGg | 2023-08-31 00:34:04 | bKeW4T6E |
| VfsYXOqdm6g6w1UF0kjo | drYMVIZX | figures/umap_fig1_score-wgs-hits.png | .png | None | None | None | None | 118999 | laQjVk4gh70YFzaUyzbUNg | md5 | bpARMLxORj3ESM | F1CQ72KwL6O3rJMnXCGg | 2023-08-31 00:34:04 | bKeW4T6E |
| nbusHDpk8XRUhwwcbprI | drYMVIZX | schmidt22_perturbseq.h5ad | .h5ad | AnnData | perturbseq counts | None | None | 20659936 | la7EvqEUMDlug9-rpw-udA | md5 | 1I1vsRml40e61j | tffplIqlaB6zIxUFqW6r | 2023-08-31 00:34:02 | bKeW4T6E |
| jHWdtR54tOOkYxeZCY0X | drYMVIZX | perturbseq/filtered_feature_bc_matrix/features... | .tsv.gz | None | None | None | None | 6 | 9Ff1enEdxSeJZiCzNjWEIw | md5 | wlqQRtbMVc8hwl | D8Ow7O5itlCJDo01GFGL | 2023-08-31 00:34:01 | bKeW4T6E |
| tc8B6nRZg6Df0MLjcSK0 | drYMVIZX | perturbseq/filtered_feature_bc_matrix/barcodes... | .tsv.gz | None | None | None | None | 6 | us5fmPRx-dZCmz3qeJyUjQ | md5 | wlqQRtbMVc8hwl | D8Ow7O5itlCJDo01GFGL | 2023-08-31 00:34:01 | bKeW4T6E |
| frtCfY668LVsP0oPVtDR | drYMVIZX | perturbseq/filtered_feature_bc_matrix/matrix.m... | .mtx.gz | None | None | None | None | 6 | VmL8mSTO7y9XQ26meAiIdg | md5 | wlqQRtbMVc8hwl | D8Ow7O5itlCJDo01GFGL | 2023-08-31 00:34:01 | bKeW4T6E |
| WXy5qOTYuITD6M2FF3ZV | drYMVIZX | fastq/perturbseq_R2_001.fastq.gz | .fastq.gz | None | None | None | None | 6 | GuuBdfyqMwF3kaianfWcpw | md5 | asCuZcubwaipQv | wL9sxwcZ1MV3iVbhr7yt | 2023-08-31 00:34:00 | DzTjkKse |

Run

| id | transform_id | run_at | created_by_id | reference | reference_type |
|---|---|---|---|---|---|
| LhTHTlALeDgHOAiN0cCc | FyNGHYEYmulKHp | 2023-08-31 00:33:57 | DzTjkKse | None | None |
| 8Lxcoit3CY4XURcUGREQ | QfaaiH3PgXD5lo | 2023-08-31 00:33:59 | bKeW4T6E | None | None |
| wL9sxwcZ1MV3iVbhr7yt | asCuZcubwaipQv | 2023-08-31 00:34:00 | DzTjkKse | None | None |
| D8Ow7O5itlCJDo01GFGL | wlqQRtbMVc8hwl | 2023-08-31 00:34:01 | bKeW4T6E | None | None |
| tffplIqlaB6zIxUFqW6r | 1I1vsRml40e61j | 2023-08-31 00:34:01 | bKeW4T6E | None | None |
| F1CQ72KwL6O3rJMnXCGg | bpARMLxORj3ESM | 2023-08-31 00:34:02 | bKeW4T6E | None | None |
| DQmpwQr2V2aAGnQYAFzb | 1LCd8kco9lZUz8 | 2023-08-31 00:34:04 | bKeW4T6E | None | None |

Storage

| id | root | type | region | updated_at | created_by_id |
|---|---|---|---|---|---|
| drYMVIZX | /home/runner/work/lamin-usecases/lamin-usecase... | local | None | 2023-08-31 00:33:55 | DzTjkKse |

Transform

| id | name | short_name | version | initial_version_id | type | reference | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|
| 1LCd8kco9lZUz8 | Project flow | project-flow | 0 | None | notebook | None | 2023-08-31 00:34:04 | bKeW4T6E |
| bpARMLxORj3ESM | Perform single cell analysis, integrate with C... | None | None | None | notebook | None | 2023-08-31 00:34:04 | bKeW4T6E |
| 1I1vsRml40e61j | Postprocess Cell Ranger | None | 2.0 | None | pipeline | None | 2023-08-31 00:34:02 | bKeW4T6E |
| wlqQRtbMVc8hwl | Cell Ranger | None | 7.2.0 | None | pipeline | None | 2023-08-31 00:34:01 | bKeW4T6E |
| asCuZcubwaipQv | Chromium 10x upload | None | None | None | pipeline | None | 2023-08-31 00:34:00 | DzTjkKse |
| QfaaiH3PgXD5lo | GWS CRIPSRa analysis | None | None | None | notebook | None | 2023-08-31 00:33:59 | bKeW4T6E |
| FyNGHYEYmulKHp | Upload GWS CRISPRa result | None | None | None | app | None | 2023-08-31 00:33:58 | DzTjkKse |

User

| id | handle | email | name | updated_at |
|---|---|---|---|---|
| bKeW4T6E | testuser2 | testuser2@lamin.ai | Test User2 | 2023-08-31 00:34:01 |
| DzTjkKse | testuser1 | testuser1@lamin.ai | Test User1 | 2023-08-31 00:34:00 |

!lamin login testuser1

!lamin delete --force mydata

!rm -r ./mydata

✅ logged in with email testuser1@lamin.ai and id DzTjkKse

💡 deleting instance testuser1/mydata

✅ deleted instance settings file: /home/runner/.lamin/instance--testuser1--mydata.env

✅ instance cache deleted

✅ deleted '.lndb' sqlite file

❗ consider manually deleting your stored data: /home/runner/work/lamin-usecases/lamin-usecases/docs/mydata